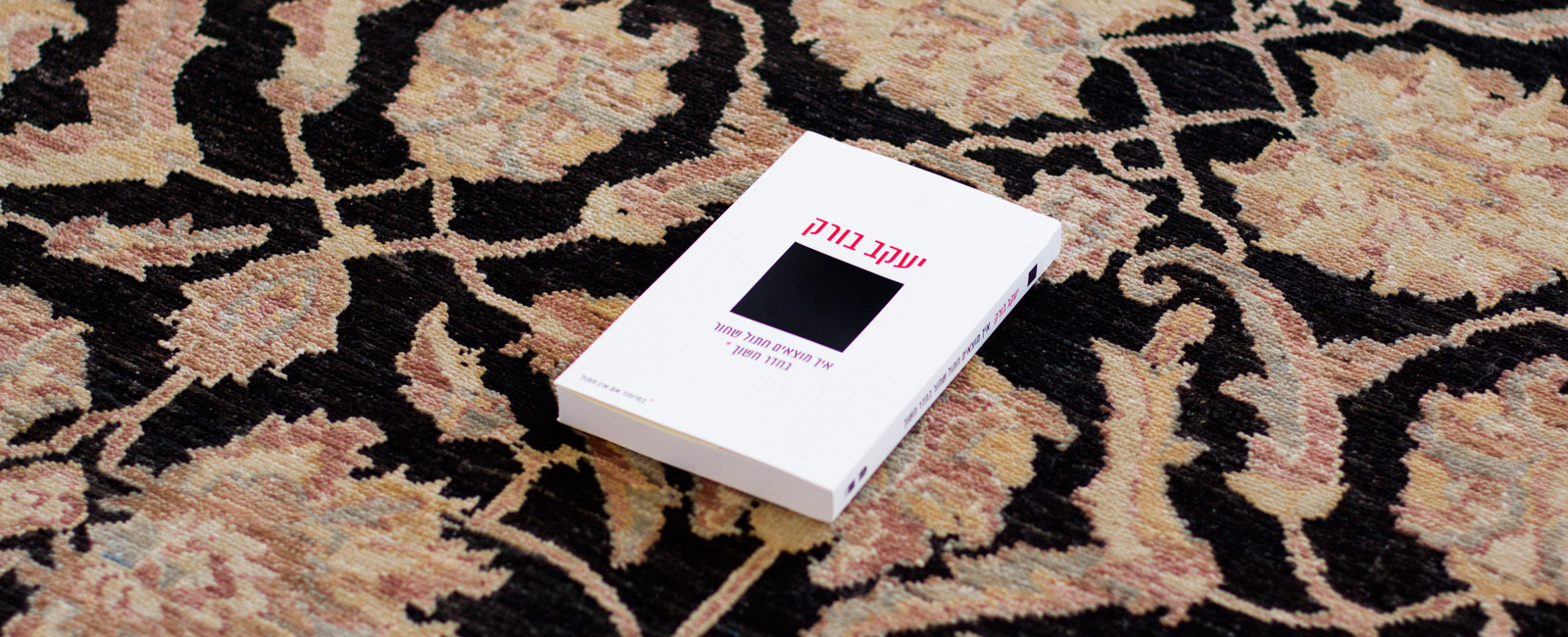

The English version of the book is available on Amazon (link)

Overview

How Does One Find a Black Cat in a Dark Room?

- Why do those who lack a sense of humor keep on telling tasteless jokes?

- Are babies that are exposed to classical music in infancy more developed?

- Why do tall and slim people earn more?

- Can you move a table solely by the power of concentration?

- Why are more baby boys than baby girls born?

- What is the right moment to let go of the rope of a hot-air balloon about to take off?

- Why is one rifle of an execution squad equipped with a blank cartridge?

- What is the value of the writer’s corpse and that of his readers?

In the personal and witty style familiar from his first book, “Do Chimpanzees Dream of Retirement,” and his other best sellers, Jacob Burak answers all these questions in his new book. But make no mistake about the flowing, accessible style, or about the author. How to Find a Black Cat in a Dark Room is a subversive book by someone fully aware that his financial success is bound up with the price that society as a whole is asked to pay. Burak combines the latest behavioral research with deep social awareness and vast business experience to address what is, for him, the most important question of all: How is it that in an era of unprecedented economic growth, inequality has grown to such dimensions that it threatens our very existence as citizens? He sheds light on our weaknesses as human beings and on the weakness of the economy that is part of our day-to-day reality, crystallizing them into a disturbing image of a system that has lost its way. He also suggests that those who were smart enough to decipher the rules of the game should return a larger share of the fruits of their success to society, and he invites his readers to consider being part of a new social order.

The book is filled with insights, many of them highly entertaining. Among other things, we learn which exercises most effectively strengthen our willpower; what makes urban legends so resilient; which fundraising advertisement works best; and why it is so difficult for us to change our opinion after receiving new information, almost as difficult as finding a black cat in a dark room.

Excerpts

Cracking up

Or, how to improve the odds in Russian roulette

Crackers are flat, tasteless baked products, whose sole justification for existing is their low calorie content. A cracker really doesn’t have much of a life without a dip to go with it. Christmas crackers, on the other hand, are full of surprises and a source of excitement at any self-respecting holiday meal. At least, the sort held in British Commonwealth countries. Christmas crackers consist of three cardboard cylinders attached lengthwise and wrapped in shiny, colorful cellophane. They resemble giant candies. The crackers are opened with a single pull and make a slight popping sound in the process; this is produced by the cardboard strips connecting the cylinders, which have been coated with a special chemical substance. When the cracker opens, holiday favors spill out from the main cylinder onto the table: a paper hat, a tiny plastic toy, a note with a joke or saying printed on it, and other delightful surprises.

Now, imagine you have been invited to a party at which each of the six guests is offered a chance to draw a Christmas cracker from a barrel placed some distance away. The host informs you that there are six crackers in the barrel − five of them contain a check for an unusually large sum, but the remaining cracker has a bomb in it that will kill the person who opens it. This is the version of Russian roulette that Graham Greene’s characters face in his 1980 novella “Doctor Fischer of Geneva, or The Bomb Party.”

Dr. Fischer, who made his fortune by inventing a toothpaste, is fascinated by the greed of the wealthy. He spends his time planning parties at which rich guests are willing to go through an embarrassing series of humiliations just to win, by the end of the evening, a gift they could have easily bought for themselves. The bomb party is the climax of these events and the high point of the inquisitive doctor’s (and Greene’s) powers of invention.

One of the guests, Mr. Kips, declines to participate and withdraws before the loaded game begins. And then, while another guest gathers the strength to approach the barrel, he is suddenly preempted by Mrs. Montgomery, a blue-haired American woman who runs over to the barrel screaming “Ladies first” and draws out a cracker. A check for 2 million Swiss francs peeks out when it opens. It seems that she rushed ahead after calculating the odds of her making it out safely with the check, and assumed that going first would give her the best chance. Her thinking sets a statistical chord trembling in the mind of Monsieur Belmont, who protests aloud: “We should have drawn for turns,” and feels that his chances of blowing up have now increased to one in five (previously it had been one in six). After a slight hesitation, Monsieur Belmont draws a cracker and he too is rewarded with a check. The odds of exploding with the next cracker have gone up to one in four.

Mr. Jones, who has been contemplating suicide for some time, declares that he will draw the last cracker. The comments of the frightened guests convey the sense that the odds of drawing the bomb go up the later their position in line. Is this the case? Peter Ayton, the clever psychology professor at City University London, recognized the research potential in the parties thrown by Greene’s host and analyzed the guests’ behavior using scientific tools. The question he wished to answer was whether the order in which the guests draw the crackers matters; in other words: Did the guest who rushed to draw the first cracker have a better chance of coming out with a fat check in hand instead of a severed ear?

The odds of drawing the bomb do indeed rise after the first two crackers have been pulled, but these odds are conditional on neither of them having exploded. The longer you wait, the greater your chances of exploding, but only if nobody has exploded before you. In fact, the longer you wait, the greater the chance that someone will draw the bomb before you, but Dr. Fischer’s guests evidently are not skilled at assessing risks. With your permission, a brief pause for a statistical exercise. The first guest has a 1/6 chance of drawing the fatal cracker and a 5/6 chance of getting a check. Once the first guest has drawn, the second guest has a 1/5 chance of not surviving, provided the first guest was spared an embarrassing explosion. His chances of kissing the world goodbye are therefore the product of the first guest’s chances of staying alive (5/6) and his own chances of drawing the bomb (1/5), i.e., 5/6 x 1/5, which is exactly 1/6. Likewise, the third guest’s chances of getting blown up are 5/6 x 4/5 x 1/4; again, 1/6. Statistically speaking, it can be proved that for any number of guests and any number of crackers and bombs, the odds of a guest exploding do not depend on his position in line. Why then did the guests behave as if the order mattered?
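For readers who would rather check the arithmetic than take it on faith, here is a minimal sketch in Python that repeats the calculation for every position in line. It assumes a single bomb and that every guest draws in turn; the function name `explosion_odds` is made up for this illustration, and the more general claim about several bombs is not covered here.

```python
from fractions import Fraction

def explosion_odds(crackers: int = 6) -> list[Fraction]:
    """Exact chance that the guest in each position draws the single bomb."""
    odds = []
    still_safe = Fraction(1)  # chance that nobody has exploded so far
    for remaining in range(crackers, 0, -1):
        # Chance of drawing the bomb, given that it is still in the barrel.
        conditional = Fraction(1, remaining)
        odds.append(still_safe * conditional)
        # Chance that this guest survives too and the game goes on.
        still_safe *= 1 - conditional
    return odds

for position, chance in enumerate(explosion_odds(), start=1):
    print(f"guest {position}: {chance}")
```

Every line of the output shows the same 1/6: the rising conditional odds are cancelled exactly by the shrinking chance that the game gets that far.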

Prof. Ayton explained the laws of “the bomb party” to 77 psychology students and asked them, among other things, where they would prefer to be positioned in line if they could choose their turn before the first cracker is drawn. Forty percent chose to be first and 42 percent preferred to go last. A clear preference for the extremes. Only 3 percent thought the position in line made no difference. The results of this study reflect the illusion shared by the bomb party guests that there is an advantage to being first in line.

When the students in the experiment were asked to explain their choices, those who opted to be first said that this gave them the greatest chance for survival, whereas those who favored the last place in line explained that in this way they would at least be certain of their fate (a mistake of course, since the choice is supposed to be made before the process begins) or that they believed they could refuse to draw the final cracker. To neutralize this element, Ayton put together a new group of 70 students whom he presented with an addition to the earlier scenario: They must draw the cracker, or else Dr. Fischer would shoot whoever tries to get out of playing the cruel game. The results resembled the results of the first experiment, except that the final position in line became less popular.

Why did the participants in these experiments perceive the first place in line (and to a lesser degree the last) as preferable to all the other options, even though a simple calculation shows that, as far as the odds of exploding go, there is no difference between the positions? One possibility, of course, is that the way the issue was presented gave rise to confusion. But the more interesting explanation is that the subjects had trouble imagining a bloody scenario in which a bomb goes off at an early stage in the process, a possibility the statistical calculation takes into account, and neglected this in assessing the odds.

The human difficulty of imagining a scenario in which the worst of all occurs is one I encountered as a young naval officer almost 40 years ago, right after the Yom Kippur War. I was asked to put my training in operations research to use in developing a simulation of the next war, in order to enable naval commanders to better estimate how many of the innovative sea-to-sea missiles to order. But the model I labored to develop suggested a procurement volume smaller than that which the navy’s experts in warfare theory deemed necessary. A reexamination of the model failed to alter the phenomenon, and the simulation consistently yielded more modest (but also cost-effective) procurement requirements.

The mystery of that discrepancy was solved during a lengthy meeting of all those involved, in which the basic assumptions of the various assessment methods were discussed. While the human assessors had difficulty addressing the possibility of the navy losing a missile boat in battle, the indifferent computer that followed my model sank two boats in the first days of the fighting without shedding a tear. And as we know, a sunken boat does not need to be reequipped for the next battle.

Many are familiar with a peacetime example of the logical conundrum Ayton presents. In a well-known paradox, a student explains to his classmates why the teacher will not be able to give them the pop quiz she has promised for the following week. If she holds it on Friday, the student argues, then we will know about it on Thursday evening and the quiz won’t be a surprise. Thursday isn’t an option either, because if Friday is ruled out then Thursday will be the last possible day for the quiz. But then students would know on Wednesday evening that Thursday is out, and so on and so forth until the conclusion is reached that the pop quiz cannot take place at all.

This logic overlooks one important possibility: The quiz, the unpleasant scenario in this case, has already occurred. Ayton wrote a short article on the subject of the “surprise-party illusion” even before he conducted the empirical experiment described here.

In the article Ayton also referred to an autobiographical description by Greene, in which he recounted his personal experience with the game of Russian roulette. Ayton identified a misconception regarding the calculation of probabilities involved in this dangerous game in Greene’s account.

When he sent Greene the article, which in addition to the statistical calculations also proposed that Greene’s personal experience had served as the inspiration for the novella, he was surprised to receive the author’s reply: “It amused me, but I am afraid it was far too mathematical for me to follow.”

Trial and Error

Torture numbers and they’ll confess to anything.

− Gregg Easterbrook (writer, senior editor, The New Republic)

Last October we learned that research done by Dutch psychologist Diederik Stapel concerning stereotypes − research that often earned raves from colleagues and was called “too good to be true” − was in fact just that: not true. An investigative committee found that the senior researcher from Tilburg University did not let rigorous scientific methods get in the way of his desired results. The committee found that Stapel had published at least 30 scientific articles based on fabricated data, and its chairman said there were likely more. How is this possible?

Joseph Simmons from the Wharton School of the University of Pennsylvania and his colleagues propose an answer in the October 2011 edition of the respected journal Psychological Science. Their thought-provoking article, entitled “False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant,” illuminates the incredible ease with which statistical tools may be manipulated to bolster behavioral science theories.

The authors charge that flexibility in data collection, a crucial aspect of scientific research that does not receive full disclosure in many studies, enables findings to be portrayed as statistically significant when this is not actually the case. They place the blame on what they call “researcher degrees of freedom” that make it possible to prove almost any theory. Researchers are free to decide, among other things, on the size of the sample, when to stop collecting data and which irregular observations to exclude, and it is not uncommon for them to arrive at a definition of their hypothesis in the course of the study rather than beforehand. Proper (i.e., improper) management of the data-collection phase can substantially increase the chance of obtaining a statistically significant finding that is not scientifically supported. A finding is conventionally deemed significant when the likelihood of its occurring by chance alone, rather than because of the explanation offered by the researchers, does not exceed five percent.

The article’s authors also organized a study of their own to prove their argument. They asked one group of subjects to listen to the famous Beatles song “When I’m Sixty-Four.” Another group listened to “Kalimba,” an instrumental piece that is offered free with the purchase of Windows 7.0. All participants were asked to fill out a wide-ranging questionnaire about their opinions, tastes and preferences, and to state their date of birth. And the surprising result: Subjects who listened to “When I’m Sixty-Four” were found to be almost a year and a half younger than those who listened to the other song: not “felt younger,” but actually were younger. Statistical magic that borders on the discovery of the legendary Fountain of Youth.

How did this happen? Well, one reason is that the researchers tested “the effect of listening to the song” against a large number of variables, but selectively reported solely on the one connection that withstood the test of statistical significance (the other variables that appeared on the questionnaire were not mentioned). Another reason is the flexibility that enabled the researchers to cease collecting data whenever they chose. In many behavioral studies, this decision is not made before the research is conducted, but while it is in progress.
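The arithmetic behind selective reporting is easy to sketch. The Python snippet below assumes that each variable is tested independently at the five percent threshold and that none of them has any real connection to the song; the numbers of variables are hypothetical, chosen only for illustration, and the function name is invented here.

```python
def chance_of_false_positive(num_variables: int, alpha: float = 0.05) -> float:
    """Chance that at least one of several independent tests passes by luck alone."""
    # Each test stays (correctly) non-significant with probability 1 - alpha.
    return 1 - (1 - alpha) ** num_variables

for k in (1, 5, 10, 20):
    print(f"{k:>2} variables tested: {chance_of_false_positive(k):.0%}")
```

Under these assumptions, a questionnaire with ten unrelated variables already gives roughly a 40 percent chance that at least one of them clears the bar purely by luck.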

The problem is clear: Statistical analysis may yield a finding that passes the required threshold for significance at a particular, incomplete stage of the study, but that falls below that threshold when data from further observations are added. This element of “degree of freedom” enables researchers to decide to stop at the “right” stage and point to a “statistical significance” that does not represent any genuine finding.

In the simulation conducted by the article’s authors, they examined the data accumulated after every 10 observations and stopped at the most convenient point for them − the point where, completely by chance, the average age gap between those who listened to the Beatles and the members of the control group was the highest.
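The effect of stopping at a convenient moment can be reproduced with a small simulation. The sketch below is not the procedure used by the article’s authors; it is an illustration under simplifying assumptions: both groups are drawn from the same normal distribution (so there is no real effect), the spread is treated as known so a simple z-test can be used, and data collection is capped at 100 observations per group. All function names are invented for this example.

```python
import random
from statistics import NormalDist

def p_value(group_a, group_b):
    """Two-sided z-test p-value, assuming both groups have a known standard deviation of 1."""
    n_a, n_b = len(group_a), len(group_b)
    gap = sum(group_a) / n_a - sum(group_b) / n_b
    z = gap / (1 / n_a + 1 / n_b) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))

def study_finds_effect(rng, peek_every, max_per_group=100, alpha=0.05):
    """Run one study with no real effect; stop as soon as a peek looks significant."""
    group_a, group_b = [], []
    while len(group_a) < max_per_group:
        for _ in range(peek_every):
            group_a.append(rng.gauss(0, 1))
            group_b.append(rng.gauss(0, 1))
        if p_value(group_a, group_b) <= alpha:
            return True  # the researcher stops here and reports a "finding"
    return False

def false_positive_rate(peek_every, studies=2000, seed=1):
    """Share of simulated studies that report an effect that is not there."""
    rng = random.Random(seed)
    hits = sum(study_finds_effect(rng, peek_every) for _ in range(studies))
    return hits / studies

print("sample size fixed in advance:", false_positive_rate(peek_every=100))
print("peeking every 10 observations:", false_positive_rate(peek_every=10))
```

With the sample size fixed in advance, the share of false alarms should stay near the nominal five percent; with a peek after every 10 observations it comes out several times higher, even though nothing else about the study has changed.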

Having demonstrated how research flexibility is undermining scientific precision, the article’s authors propose a series of guidelines for researchers, and reviewers on behalf of scientific journals, to adopt in order to meet a true scientific standard. These recommendations include more transparency in reporting and management of the study, disclosure of all of the tested variables, and determination of sample size prior to the start of data collecting.

Simmons et al. show how scientific theories are liable to be backed up by unwarranted statistical support. Bearing in mind also that the editors of professional scientific journals are eager to publish “surprising findings,” it becomes easy to see how articles that present misleading findings can achieve major scientific and public visibility. The difficulty in replicating the results of such studies to reinforce or refute them is no hindrance to the editor who chooses to publish the problematic article. Most of the prestigious scientific journals do not publish articles about experiments that ended in failure or those that replicated previous experiments. Given these circumstances, aggressive researchers gain a clear publicity advantage that puts their integrity to an even greater test.

But before we point an accusing finger at behavioral science researchers, who were the subject of Simmons’ article, it is important to understand that the behavior of researchers in other scientific fields is no different. Omitting observations that don’t suit the study’s premise, deciding not to publish results that contradict the researchers’ earlier work, or adjusting research methodology in order to satisfy those who are funding the study − these are not rare occurrences.

A comprehensive comparative survey conducted by Daniele Fanelli of the University of Edinburgh (see box) reveals that two percent of researchers admit to having engaged in scientific misconduct involving the modification, or even falsification, of data on at least one occasion. According to Fanelli, the phenomenon is most common in medical and drug-development research. And in fact, a survey of studies that were routinely submitted to the U.S. Food and Drug Administration from 1990-1997 found flaws and research weaknesses in 10-20 percent of them, with two percent said to involve significant distortion of the data or results.

But, again, lest we hasten to cast aspersions on the entire scientific community, remember that this phenomenon cannot be attributed solely to the ease with which research findings may be backed up with distorted statistics, to the interest that journals have in “hot” studies, or to the money available from interested funding sources. Like a car accident, a research accident does not occur in a vacuum. To the whole mix of factors behind the accident, which had been hovering in abeyance for some time, must be added the final spark that transforms the probability into a certainty. This is the human factor, that cocktail of human pride, ambition and weakness, and it is by no means the exclusive province of the scientist.
